Securities & Investment Institute

Annual Debate

Mansion House, London

Wednesday, 14 January 2009

“In this current financial environment, more financial regulation is a major part of the solution”

For the motion:

Dr Vince Cable MP

Mr Alan Yarrow FSI

Against the motion:

Professor Michael Mainelli FSI

Mr David Bennett FSI

Chairman – My Lord Mayor, Ladies and Gentlemen.

Chairman:

Mr Christopher Jones-Warner FSI

Learn Chinese In An Afternoon

[An edited version of this article first appeared as “How To Learn…Chinese…In An Afternoon”, Professor Michael Mainelli ( 麦(mài)迈(mài)克(kè)), The Linguist, Volume 47, Number 3, page 20, Chartered Institute Of Linguists (June/July 2008)]

ABCB – New Alphabet For Standards – Association of British Certification Bodies

My Lord, Ladies and Gentlemen. It is a real honour for me to have this opportunity to address a group of people who share my passion that standards markets can improve the world, this Association of British Certification Bodies. This is a personal address, not speaking as a non-executive director of UKAS, though I realise that probably had a bearing on inviting me here. My remarks to follow are not meant in any way as UKAS policy.

Yet non-executive directorships are not filled for money – the risks are high, the time commitments always exceed the estimates and the thanks are low – nor are directorships filled with love. If seeking a non-executive directorship is the first sign of madness, the second sign is probably taking one. In return, we non-executive directors can be your worst nightmare. In my case it’s because I have a passion for world trade and sustainable economies that I would like to share with you.

To start with, I’d like to explore standards themselves. Standards are funny things. Because of my accent, I’m going to start with pronunciation standards. Because the name of the International Organization for Standardization (ISO) would have different abbreviations in different languages (IOS in English, OIN in French), back in the 1940s it was decided to use a language-independent word derived from the Greek, isos, meaning “equal”. Therefore, the short form of the Organization’s name is always ISO – “I-S-O” – and ISO follows the “z” spelling as in “organization” and “standardization”. Is that clear? ISO’s recommendation on their website is to pronounce their name whichever way comes most naturally. “So, you can pronounce it “EZO”, “EYE-ZOH” or “EYE-ESS-OH”, we don’t have any problem with that.” What a great credential for flexible standards!

And then we have date standards – oh no, I’m not talking about the US month first versus the UK day first, or even the Chinese year first. For 38 years ISO has designated World Standards Day to recognize the thousands of experts worldwide who collaboratively develop voluntary international standards that facilitate trade, spread knowledge and share technological advances. ISO officially began to function on 23 February 1947, but 14 October was chosen as World Standards Day because on 14 October 1946 delegates from 25 countries met in London and decided to found ISO. Of course, in the spirit of standards, in 2006 India, Ghana and others celebrated World Standards Day on 13 October while Nigeria celebrated from 12 to 14 October. In 2007 the European Commission held its World Standards Day conference on 17 October, while the United States celebrated World Standards Day on 18 October. Need I say more?

The truth is that the world is a messy place. Moreover, it’s human nature to resist standards, or at least male nature. A friend of mine, Paul, is raising three boys on his own. When I asked him how he kept the house clean he explained, “Michael, men don’t have standards, women do. Men have thresholds.”

The objective of standards is to help the evolution from complete mess to complete order by putting things in boxes at the right time. Managing evolution isn’t easy. Sometimes we try to box things in too early. Other times we’re so late we just add cost and unnecessary complexity to existing commodities. But done right – ahh, there we add a lot of value to consumers, to business and to society. I recently conducted a large study with PricewaterhouseCoopers and the World Economic Forum looking at solving global risks, “Collaborate or Collapse”. We concluded that society solved global risks using four collaborative approaches – sharing knowledge, implementing policies, markets and, yes, standards.

Sometimes I wish we could have a better sense of humour about it all. I’d like us to avoid going down the path of political correctness and keep our largely scientific and engineering outlook on life and its problems. Sadly, certification and standard jokes are rarer than one might like. Perhaps we should have a comedy kite-mark. Fortunately, the only after-dinner/lunch joke on standards I know doesn’t concern an ABCB member. It is about certification taken to extremes over wine.

Two oenologists are trying to outdo each other on their exacting standards. They both grab a tasting glass of red wine from the examination table in front of them. Inhaling deeply, the first wine expert remarks that “this wine is an outstanding Bordeaux”. The second interjects, “particularly when you recognise the difficulties inherent in raising vines of character in Côtes de Bordeaux-Saint-Macaire”. “Indeed”, says the first, “and as this wine is from Saint-Macaire, the terroir in that area most suited to this interpretation of the Malbec grape is, I’d suspect, Château Malromé”. “Ahhh”, counters the second, “self-evidently Château Malromé, but clearly the south-facing side, near the old well.” “Elementary really”, replies the first oenologist, “and probably the fifth row, slightly higher up the hill”. “Mais bien sûr”, adds the second oenologist, gaining the upper hand by saying, “though I’d say a late summer picking from the eighth vine in the row and, dare I add, probably picked by Pierre.” “Well”, says the first, now delivering what he believes to be the fatal blow, “of course I detected Pierre’s hand on the grapes, after a cool morning and a late déjeuner. Though his post-prandial micturition infuses this wine with a somewhat disagreeable undertone.” “Naturally it does” says the second oenologist rather coolly, “as one must certainly ask why-oh-why did Pierre drink such an inferior claret for lunch?!”.

But standards are not just about quality and one-up-manship. Adam Smith advanced the metaphor of “the invisible hand”, that an individual pursuing trade tends to promote the good of his community. Yet the Doha round is stymied. Valid social and ethical concerns transmogrify into trade restrictions. Property rights are a battleground, from carbon emissions to intellectual capital. Already, emerging carbon standards are being sharpened as weapons in future carbon dumping wars. Our state sectors swell out of recognition, crowding out the private sector that delivers value. Standards and certification markets exist to improve the functioning of global markets and trade, and even to inject market approaches into monopolistic service delivery.

Adam Smith knew that markets alone are not enough. Smith’s argument is too rich to take after an excellent meal, but what I admire about certification bodies is that you exemplify Smith’s Moral Sentiments of Propriety, Prudence, and Benevolence, combined with Reason. As ABCB members you do set high standards, think to the long-term, explore new ways to help society advance and make business and government think about risk. You realize that there is more to economic life than money – as comedian Steven Wright says – “You can’t have everything, where would you put it?”

Things change fast with trade. Looking back to post-war Japan and thinking of Japan today reminds me of the apocryphal quality control tale about relevant standards. A western company had some components manufactured in Japan in a trial project. In the specification to the Japanese, the company said that it would accept three defective parts per 10,000. When the shipment arrived from Japan, the accompanying letter stated something like: “as you requested, the three defective parts per 10,000 have been separately manufactured and have been included in the consignment. We hope this pleases you.”

Today China is the sobering reminder of the importance of trade. I heard a great sound-bite at the IOD China Interest Group two years ago, “we’ve had a commercial break these past 200 years, but now we’re back, on air”. In the 18th century China was the world’s biggest economy, with a GDP seven times that of Britain’s. But China closed its doors to trade, missing the industrial revolution, the capital revolution and the information revolution. There is a children’s joke that “you should never meddle in the affairs of dragons, because you are crunchy and taste good with brown sauce.” But we must mix it up with the Dragon. Money is odourless and poverty stinks. We must reach out to all the returnees to world trade. And we must ensure that our own standards markets are open and competitive, in turn helping world trade be open and competitive.

So, is today’s luncheon talk supposed to be slick & humorous, a call to arms or an academic lecture? Actually I want to end by emphasising the importance of conflict. Regulatory capture is a phenomenon in which a regulatory agency which is supposed to be acting in the public interest becomes dominated by the vested interests of the existing incumbents in the industry that it oversees. In public choice theory, regulatory capture arises from the fact that vested interests have a concentrated stake in the outcomes of political decisions, thus ensuring that they will find means – direct or indirect – to capture decision makers. Conflict and competition, not calm quiescence or silence, are key signs that things are working well in standards markets.

Accreditation and certification only work when the entire system is a market system, not a bureaucratic one. We are good, but we can do better. For example,

- development of a standard should be an open process involving interested stakeholders, but many ISO affiliates typically charge three figures for short documents that could be supplied electronically at no charge;

- despite our claims for openness, transparency and public benefit, certification agencies often fail to be open to the general public about whom they’ve audited for what. Outputs such as certifications and grades awarded could be better published so that they can be validated – yet the industry complains about the ‘grey’ certification market;

- accreditors must be vigilant regulators and ensure the separation of standards development from the commercial elements of implementation and review. Yet accreditors must be realistic and engage in meaningful dialogue with the industry while avoiding regulatory capture.

I could go further and talk about the widest view of standards from financial audit through to social, ethical and environmental standards with which I also work. I’d even mention that to me, ideally, certifiers should bear some indemnity that can, with the price paid by the buyer, be made publicly available. Developing countries rightfully worry that “the things that come to those that wait may be the things left by those who got there first”. Sustainable commerce means doing things differently. We must clasp the hands of the developing countries, support the invisible hand of commerce, restrain the visible hand of government and slap the grabbing hands of special interests. We must prove that a global Commerce Manifesto deserves to replace a soiled Communist Manifesto. We must keep our standard and certification markets open, transparent and competitive.

Standards markets are the great alternative to over-regulation or naked greed. We professionals committed to standards prevent both the abuse of capitalism, red in tooth and claw, and the abuse of government regulation, 1984 but without Orwell’s sense of humour. We open up trade. Let’s sell standards markets as the new third way to the sustainable economics everyone wants.

On behalf of all the guests I salute the ABCB’s hospitality and its great work on behalf of standards markets. Thank you!

London Accord – Sharing Research To Save The Planet

After two years of hard work led by Jan-Peter Onstwedder and me, we finally launch the London Accord at Mansion House on the evening of 19 December.

Grainy but true – l to r: Rt Honourable Lord Mayor David Lewis, Rt Honourable John Sutton MP, Sir Michael Snyder, Professor Michael Mainelli

My Lord Mayor, Your Excellencies, My Lords, Secretary of State, Alderman, Sheriffs, [Councillors, Distinguished Guests,] Ladies and Gentlemen… – it is my great pleasure to have this opportunity to tell you tonight about the London Accord.

The London Accord’s theme is “cash in, carbon out”. The London Accord provides informed views about climate change investment and sets out a methodology for evaluating those investments. The London Accord began in 2005 at almost the same time as the Stern Review. Sir Nicholas said last year that “climate change is the greatest market failure the world has seen”. While I admire many aspects of the Stern Report, I beg to differ with this specific point.

Markets haven’t failed. Markets have done what markets do, set prices and transfer resources and risks. In the case of climate change, what we have is an absence of a market. Markets and investors have acted accordingly. Events in Bali last week change all that. Henceforth, society will turn greenhouse gas emissions into a property that can be capped, traded, and reduced – and we must factor these emission costs into all investment decisions.

Why does the London Accord matter? Well, for a start, the publication of the London Accord matters to us because we have been working on it for over two years, but the London Accord should matter to everyone. The comedian Jay Leno once quipped, “According to a new UN report, the global warming outlook is much worse than originally predicted. Which is pretty bad when they originally predicted it would destroy the planet.” The London Accord matters because the financial services community says, if society is prepared to pay, commerce can stop global warming.

Our future scenarios for greenhouse gas emission prices are double today’s €20 per tonne of CO2, more like €40 per tonne of CO2. In rough terms, we need to reduce the CO2 emissions per Briton from 10 tonnes to one or two tonnes. At around €40/tonne that’s about €300 per person or about €1,200 per family per year. It’s going to be quite a different world.
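The arithmetic behind these figures can be sketched roughly as follows. This is one reading of the numbers rather than the London Accord's own model: it values the tonnes cut at the scenario price, the names are illustrative, and the family size of four is an assumption implied by €1,200 / €300 rather than stated in the speech.

```python
# Rough check of the per-person carbon cost quoted above.
# Only the numbers come from the speech; all names are illustrative.

PRICE_EUR_PER_TONNE = 40   # scenario carbon price from the speech
CURRENT_TONNES = 10        # current CO2 emissions per Briton
TARGET_TONNES = (1, 2)     # "one or two tonnes"
FAMILY_SIZE = 4            # assumption: implied by 1,200 / 300, not stated


def abatement_cost(current, target, price):
    """Value of the tonnes cut per person per year at the carbon price."""
    return (current - target) * price


# Cost per person for each of the two targets.
per_person = [
    abatement_cost(CURRENT_TONNES, target, PRICE_EUR_PER_TONNE)
    for target in TARGET_TONNES
]
print(per_person)  # [360, 320], which the speech rounds to "about 300"

# The family figure scales the rounded per-person figure.
family_cost = 300 * FAMILY_SIZE
print(family_cost)  # 1200
```

On this reading the exact range is €320 to €360 per person, so "about €300" is a rounded-down figure.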

Private sector investment is crucial to climate change investment (86% of capital investment in energy supply must be from the private sector – UNFCCC). Much of that investment will be funded through large pension funds and asset managers who rely on analysis by the financial services sector for investment decisions. So what did the London Accord team conclude?

• Energy investment is going to become much, much riskier;

• Investors should invest now. At prices per tonne of CO2 over €30, investment portfolios can be constructed that produce both attractive financial and ‘carbon returns’.

• Forestry is a big unknown – there is a need to narrow the range of credible estimates for abatement and costs of forestry projects, as well as solidify carbon offset markets for forestry.

• Efficiency gains continue to show great potential for financial and carbon returns but may need behavioural incentives such as regulation.

• Carbon capture and sequestration/storage (CCS) seems an unrealistic investment today.

Moreover, financial services leaders understand the need to collaborate or collapse. The London Accord is a great ‘open source’ research project – the largest-ever private-sector investment collaboration into climate change, representing work valued at £7 million ($15 million). Buy-side firms such as Universities Superannuation Scheme, Insight, and Legal & General helped sell-side firms and analysts shape the project to ensure its outcomes would be useful to investors. Observers from the EU, the International Energy Agency, the United Nations Framework Convention on Climate Change and others have been involved.

In the time available, I must turn to thanks, and there are far too many. The London Accord has truly been a cooperative effort. Jan-Peter Onstwedder and I recorded nearly 500 thanks in the CD-ROM you will receive tonight, and still we missed people. However, on such a special evening there are a few I must single out. First, I would thank my team at Z/Yen, including Ian Harris, Linda Cook, Mark Yeandle, Kevin Parker, Liz Bailey and Alexander Knapp, who put up with two years of stress. BP staff worked throughout on the London Accord, and here I would single out Tessa Marwick, Andrew Vivian, James Palmer and Sanet Phillips. Gresham College’s Lord Sutherland and Barbara Anderson helped to kick things off and generously provided facilities, including a technical seminar at Gresham College we’re having on 30 January 2008 to which all of you are welcome. Henry Thoresby and Sir Howard Davies gave us excellent support from the LSE community pulling the threads together.

The best way to thank the contributors, the important people who did all the work, is to enumerate their reports:

First we had two papers setting the context:

• Alexander Evans, Center on International Cooperation at New York University & David Steven, River Path Associates, wrote “Climate Change: the State of the Debate”, examining how climate change rose above other global issues;

• Nick Butler, Cambridge Centre for Energy Studies set out “The Forces of Change in the Energy Market”.

Then, the heavyweights analysed the investment opportunities:

• Solar Energy – Eckhard Plinke and Matthias Fawer, Bank Sarasin

• Investing in Biofuels – Conor O’Prey, ABN AMRO

• Investing in Renewable Energy – Mark Thompson, Canaccord Adams

• The Global Case for Efficiency Gains – Miroslav Durana, Tanya Monga and Hervé Prettre, Credit Suisse

• Energy Efficiency – Asari Efiong, Merrill Lynch

• Carbon Capture and Sequestration – Marc Levinson, JPMorgan Chase

• Emissions Trading – Andrew Humphrey and Luciano Diana, Morgan Stanley

• Forest Assets – Stephane Voisin and Mikael Jafs, Cheuvreux

A number of us examined the wider impacts:

• Credit Risk – Christopher Bray and Dr Richenda Connell, Barclays and Acclimatise

• Carbon Intensity – Valéry Lucas-Leclin, Société Générale

• Sustainable Investment Solutions – Alice Chapple, Vedant Walia and Will Dawson, Forum for the Future

• The Legal Issues – Lewis McDonald, Herbert Smith

• Climate Change Investment and Policy Portfolios – James Palmer

Finally, some of us considered the policy implications:

• Technological Development – J Doyne Farmer & Dr Jessika Trancik, The Santa Fe Institute

• Emission Standards – Steven Davis, The Climate Conservancy

• Product-Level Standards – Hendrik Garz, WestLB

• Philanthropy – Davida Herzl, NextEarth Foundation

• Carbon Markets and Forests – Eric Bettelheim, Gregory Janetos and Jennifer Henman, Sustainable Forestry Management

• Cap-and-Trade Versus Carbon Tax – Alexander Knapp, Z/Yen, Jan-Peter Onstwedder

The full publication, The London Accord: Making Investment Work For The Climate, contains 25 reports in 780 pages.

Very early on we formed a governance team consisting of the early supporters, each of whom gave freely of their time and whom I would like to thank personally:

• Alice Chapple from Forum for the Future

• Simon Mills from the City of London Corporation

• Chris Mottershead from BP plc

• Alexander Evans from New York University’s Center on International Cooperation

Before closing, I would like to move on to three special thanks. The first is a personal and corporate thank you to the City of London Corporation. Without the Corporation’s resources this project would be a pale shadow of what it is tonight. The personal part is to thank Michael Snyder, Chairman of the Policy & Resources Committee, for putting his drive, intellect and charisma behind the London Accord so early on. People remark that it seems harder and harder for government and commerce to work together. That may be true, but when you see the City of London accomplishing so much globally, it’s hard to remember it’s just our local council.

Second, my heartfelt thanks must go to Jan-Peter Onstwedder and all the support we had from BP and, in particular, Vivienne Cox. Jan-Peter was the Project Director from last year, well before formally joining the project. Jan-Peter has diplomatic and organisational skills of which I can only dream. Jan-Peter should be giving this talk, but is, as ever, too modest. Jan-Peter applied his intellectual, social and organisational skills with the determination to show that financial services can make a difference to climate change. It was a privilege to work with Jan-Peter this year.

Finally, I would like to especially thank you, my Lord Mayor. Two years ago you had the foresight and courage to lend this crazy idea your valuable support. Two years later you have the generosity and kindness to lend us your home for this magnificent event. You have been stalwart throughout and I hope that the London Accord publication is a fitting tribute to your concern, your passion and your vision of London’s financial services industry at the front of the fight against climate change. In your year in office, which has started so brilliantly, I wish you the highest success in all of your endeavours in office, from the ceremonial to the commercial to the charitable.

The London Accord demonstrates that the financial services sector understands well the future implications of climate change. A man once reproached William Shatner, who played Captain Kirk in Star Trek: “On your show, you had Russians, Chinese, Africans, and many others – why did you never have a character of my nationality?” Shatner supposedly replied, “You must understand that Star Trek is set in the future.” The London Accord is about our future and we would like to make sure that all nationalities are there, tropical, temperate or arctic; mountain top or sea-side.

Financial services is stereotyped as a selfish, self-centred industry. Over the past two years the collaboration and sharing of the London Accord has proved that stereotype wrong. The London Accord makes me proud to work in financial services. You should all be proud too.

Thank you.

Barging In

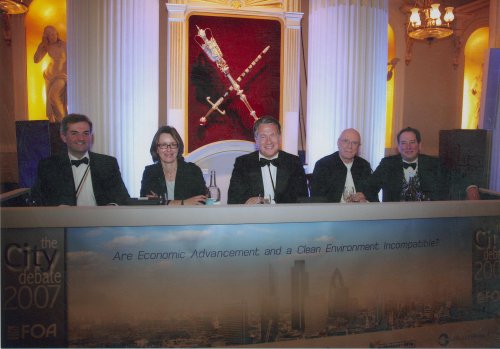

The City Debate: Are Economic Advancement & A Clean Environment Incompatible?

The City Debate 2007 at Mansion House, 29 January 2007

[left to right]

Chris Huhne MP (Liberal Democrats’ Shadow Environment Food and Rural Affairs Secretary), Emma Duncan (Deputy Editor, The Economist), Rt Hon Michael Portillo, Richard D North (Fellow, Institute of Economic Affairs), Professor Michael Mainelli (Executive Chairman, Z/Yen Group)

The somewhat awkward motion was “Green and Growth Don’t Go” (together implied) though the invitation was “Are Economic Advancement and a Clean Environment Incompatible?”. The audience was over 300 people from finance.

Professor Michael Mainelli’s opening statement to engage the audience was:

Chairman – My Lord Mayor, Ladies and Gentlemen.

Anyone with a waistcoat like mine knows that Green and growth don’t go together. Tonight I am that kid who told you that Santa Claus didn’t exist. Green and growth is a lie-for-children alongside Santa Claus, the Easter Bunny, Nessie, immaculate conception, intelligent design and honours-on-merit.

Let’s face one inconvenient truth – this spinning rock we live on is currently over-run by a bunch of trumped-up naked apes who have three primate imperatives:

- find bright, shiny fruit;

- avoid snakes;

- if you see someone attractive, let them know in a totally satisfying way that you find them attractive.

Three simple imperatives have led to 6.5 billion of the pests monkeying with the world’s thermostat. Way up in the white ivory trees, some brainy apes don’t fulfil imperative three very often. These apes sublimate their urges by dreaming up theories. Their current brainy theory: the moist fringe of this spinning rock is getting all steamy.

Do we know that CO2 levels are rising? Yes. Is there a credible theory of global warming? Yes. Does this imply big changes over the next century? Yes. So what’s to debate? Well, other brainy apes down on the jungle floor sublimate their urges playing trading games and inventing theories about alpha returns. Jungle-floor apes have a theory: global warming can be stopped if everyone changes their economics a little bit. However, preventing global warming requires massive changes in economics and human nature within this decade, and human nature won’t change in time.

At Mansion House we can debate with real numbers. This is the City, not Westminster.

In 2004, gross worldwide emissions were about 7bn tons of carbon. Emissions are projected to grow to 14bn tons by 2054. For long-term stability, emissions in 2054 must be at or below today’s 7bn tons, and decreasing. Green and growth fans need a 7bn ton cut from “business as usual” by 2054. Socolow at Princeton identified 15 reasonable opportunities, called “wedges”, that would each cut 1bn tons by 2054. One wedge – convert 250 million hectares to biofuels, 1/6th of the world’s cropland. Another wedge – 2 million wind turbines on 30 million hectares, a Germany of wind turbines. Remember we need at least seven of these megaprojects in the next 47 years. They’re very realistic plans. Each wedge costs more than the GDP of China.
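The wedge count in this paragraph follows from simple subtraction, which can be sketched as below. The variable names are illustrative, and the start year of 2007 is taken from the date of the debate rather than from Socolow's work.

```python
import math

# Figures quoted in the speech, in billions of tons of carbon per year.
EMISSIONS_2004 = 7        # gross worldwide emissions in 2004
PROJECTED_2054 = 14       # "business as usual" projection for 2054
WEDGE_SIZE = 1            # cut delivered by one wedge by 2054
WEDGES_IDENTIFIED = 15    # opportunities identified by Socolow

# Holding 2054 emissions at the 2004 level means cutting the difference.
required_cut = PROJECTED_2054 - EMISSIONS_2004
wedges_needed = math.ceil(required_cut / WEDGE_SIZE)

# Assumption: the clock starts in 2007, the year of the debate.
years_available = 2054 - 2007

print(required_cut, wedges_needed, years_available)  # 7 7 47
```

So at least seven of the fifteen identified wedges would have to be delivered in full within 47 years.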

Global GDP was $44 trillion in 2005. Stern expects people to pay $440 billion each year to prevent climate change, $125 billion in the USA, $20 billion per annum in the UK. Starting this year. And the CO2 will still exceed 500 ppm. This is incredible, by which I mean it’s not believable. I don’t want a repeat of my Westminster experience on Nuclear Electric decommissioning. “Yes Minister, that number is possible, but certainly not probable!”
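These cost figures hang together arithmetically, as the rough sketch below shows. It also reproduces the $22 trillion and $333-per-Briton figures used later in the speech. The names are illustrative, and the UK population of 60 million is an assumption chosen because it reproduces the $333 figure; the speech does not state it.

```python
# Figures quoted in the speech, in billions of US dollars.
GLOBAL_GDP_BN = 44_000     # global GDP in 2005 ($44 trillion)
ANNUAL_COST_BN = 440       # Stern's annual cost, as quoted
UK_COST_BN = 20            # UK share of the annual cost
YEARS = 50                 # horizon behind the "50-year" figure

UK_POPULATION = 60_000_000  # assumption: not stated in the speech

# The annual cost as a share of global GDP.
share_of_gdp = ANNUAL_COST_BN / GLOBAL_GDP_BN
print(share_of_gdp)  # 0.01, i.e. 1% of global GDP

# The cumulative cost over fifty years.
fifty_year_total_bn = ANNUAL_COST_BN * YEARS
print(fifty_year_total_bn)  # 22000 billion, i.e. $22 trillion

# The UK cost spread across the assumed population.
per_briton = UK_COST_BN * 1_000_000_000 / UK_POPULATION
print(round(per_briton))  # 333
```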

The UK has 1% of world population, but emits 2.2% of greenhouse gases. The FTSE 100 alone produce 1.6% of global greenhouse gases. The average person in the UK produced as much CO2 by 6 January as the average person in the world’s poorest countries will all year. You really think this will change?

We are natural optimists in the City. We want to believe that we can do good; we can be green; the bad are punished and the good are rewarded; every story has a happy ending. We fixed the ozone layer; we handled sulphur dioxide well. But these weren’t 50-year, $22 trillion problems. You’ll point to emerging carbon markets we’d all love, but right now carbon credits are little different than the Catholic Church selling indulgences. And that leads us to human nature – sex, greed, fear and apathy.

We breed. By 2054, there will be 9.5 billion of us. Even if Britain stopped all GHG emissions, within two years China’s growth makes that effort worthless. What’s the point if Brazil, India, Russia and China just keep going?

Sure people care but CO2 is tasteless and odourless, while I must have missed seeing us stop a million children a year dying of preventable HIV and measles, 1 million of malaria, 1.5 million of diarrhoea? We are going to solve global warming, yet let 2.7 billion people live on less than $2 a day? Even Stern had to cook the discount rate to make global warming a better investment than the UN’s Millennium Development Goals.

If you believe that green and growth go together:

- you believe that population will painlessly stop growing;

- every Briton will pay at least $333 each year forever to prevent global warming, or perhaps $850 or more;

- two centuries of high latitude infrastructure will be replaced, we will transform the UK’s £4 trillion housing stock – you’ve mothballed listed buildings such as this magnificent house;

- you’ve stopped funding all oil exploration projects – what’s the point? If we burn what we already have we exceed 1,000ppm;

- you’ve shorted most of the FTSE;

- you’ve given up skiing and holiday in Croydon.

Our distinguished journalist will be erudite and persuasive, coming from a magazine with a readership determined to make money out of any investment fashion, a magazine that thinks invading Iraq was a good idea.

After her you’ll hear from a gifted Westminster speaker who loves another excuse for them to raise taxes from us. Our speaker’s party peddles a real whopper for children – more tax is good for you and good for the country.

Human nature means that green and growth don’t go together, whatever lies for children politicians and journalists want to sell. Let’s be The City and tell it like it is. You must vote for the motion.

Thank you.

Result:

Debate This House believes that Green and Growth Don’t Go

Pre Vote

- Yes 32

- No 65

- Undecided 3

Final Vote

- Yes 41

- No 58

- Undecided 1

Yes, Michael and Richard won.

Additional Motions:

This House believes that the answer to climate change is technology and not restraining consumer demand

- Yes 54

- No 40

- Undecided 6

This House believes that going nuclear is the answer to going green

- Yes 57

- No 35

- Undecided 8

This House believes that US policy is the biggest single problem to combating climate change

- Yes 63

- No 36

- Undecided 1

World Traders’ Installation Dinner 2006

A speech in honour of my dear friend, Jack Wigglesworth, on 12 October 2006 in Drapers’ Hall – I wound up becoming a World Trader because of this talk…

Independence From East Surrey

East Surrey Business Club

4 July 2006 – The House of Lords

Your Excellencies, Distinguished Guests, my Lords, Ladies, and Gentlemen. It is truly an honour to share with you the outstanding hospitality of that most kind Scottish Laird, Lord Lindsay. Last year my wife and I spent most of the time dropping such casual gems as, “did we mention we saw Cream in concert earlier this year.” For weeks now I’ve been subtly dropping lead bricks such as, “terribly sorry but I can’t join you on the 4th of July as I’m busy that night giving a speech at the House of Lords”. It is a great honour for an American to have the opportunity to talk in this humbling setting, even if it is “at the House of Lords” rather than “to the House of Lords”, but I found some reactions to the idea a little confusing. Despite mentioning the tradition of picnics and fireworks while dropping the fact that I’d be speaking on Independence Day, all of my British friends kept referring to their special day on the 4th of July as a huge bout of Thanksgiving.

Of course, I speak not of Independence Day the film, nor of England’s freedom from 13 burdensome colonies, but of America’s freedom, had it remained part of the United Kingdom, from today’s Scottish Rule.

But the biggest honour, and my greatest pleasure, is in thanking each and every member of the East Surrey Business Club and their guests for joining in this celebration of the special relationship between the UK and the USA on this most memorable day, Independence Day. Britain and America do have a special relationship, well worth celebrating. I wondered why me rather than some more distinguished speaker with a better accent, but I realised why after I spoke with Geoffrey Sanderson. Geoffrey explained that East Surrey is blessed with some fine locations you use each year for events. East Surrey holds Hever Castle of Anne Boleyn, King Henry the Eighth and Astor family fame; Chartwell, home of Winston Churchill. But it was the Gresham connection that mattered. Titsey Place is closely linked to Sir Thomas Gresham, who in 1579 founded Gresham College where I have the privilege of being Mercers’ School Memorial Professor of Commerce. Then, at the other end of the scale, using the Gresham family symbol of the insect Sir Thomas Gresham himself made famous, and still found throughout the City of London, there is The Grasshopper Inn, home to the largest singles night in the southeast on a Friday!

In order to stay on message and explore the special relationship, I’d like to start with a wonderful story that the great gag writer, Barry Cryer, tells about that fantastic, native comedian Tommy Cooper. When Tommy Cooper was on military service with the Horse Guards he was assigned palace sentry duty but fell asleep standing inside his sentry box. While in the middle of this court-martial-able offence, he half opened one eye to see his Commanding Officer and the Regimental Sergeant Major fast approaching to discipline him. Closing his eye again he sought a single, killing word that might free him from this predicament. He shook himself, drew to his full height, opened both eyes and then said the one word that could save him – “AMEN.”

So let us find that one killing word for the special relationship. I thought, particularly given tonight’s venue, we could talk about how special our politicians are, but as I moved into the modern era I was left contrasting Churchill or Thatcher with Carter, Ford, Clinton or Bush. So I had to move on to other topics in my search for that one special word, or as Dan Quayle pointed out – “One word sums up probably the responsibility of any vice president, and that one word is ‘to be prepared’.”

So what is the special relationship? Everything important I’ve learned in life I’ve learned from one book, my cheque book. And the special relationship has cost me, having lived in the UK for over a quarter of a century. But digging through my cheque stubs failed to produce much insight, except that things in London always cost more so, as an ersatz academic, I thought I should conduct some further research, and picked up a copy of a booklet from 1942, “Instructions for American Servicemen in Britain”, published by the US War Department and issued to American GIs going to Britain in preparation for the invasion of occupied Europe. It warned about the meanings of “bum”, explained British frugality, praised British toughness and distinguished reserve from unfriendliness.

The booklet referred frequently to “Tommies”, so I wondered if we could start looking for that special word for the special relationship in slang. Everyone has a particular, endearing little phrase they use with those whom they love, “sweetheart”, “darling”, “dearest”. This is perhaps best seen at breakfast. An Australian, an American and an Englishman are breakfasting with their wives. The Australian asks, “pass the shugah, Sugar.” The American requests, “pass the honey, Honey”. The Englishman says, “pass the tea, Bag”.

For those foreigners whom we love, both Americans and Britons have special, delightful, diminutive terms of endearment that can get people all worked up, such as “frog”, “kraut” or “dago”. But for each other? “Limey” and “Yank”. Limey is presumed to refer to the use by British sailors of lime juice as an antiscorbutic. However, it is a bit difficult to get worked up about someone trying to put you down about your use of preventative medicine. The origin of Yankee is more obscure, possibly coming from the Dutch referring to New England settlers as “Jan Kees” or commoners. To the Dutch, New England’s English settlers’ strangest characteristic was constantly cooking pies, leading to a humorous aphorism by E B White:

To foreigners, a Yankee is an American.

To Americans, a Yankee is a Northerner.

To Easterners, a Yankee is a New Englander.

To New Englanders, a Yankee is a Vermonter.

And in Vermont, a Yankee is somebody who eats pie for breakfast.

As you can see, by White’s definition virtually no one in the USA is a true Yankee, so no one takes offence. By way of contrast, living in the East End I can manage to take offence at “Yank”-“septic tank”-“seppo”. I far prefer the Chinese word for American, mei-guo-ren, “people of the beautiful land”. Sadly, insults have moved even further forward these days. The popular word to describe an American on the internet sounds like the way a Westerner describes himself over here, “merrekin”. However, kids these days know what they’re doing so “merkin” is spelled “m-e-r-k-i-n”, and the internet kids know exactly what that means, a pubic wig. So perhaps we should look elsewhere for our special descriptive word of the special relationship.

Is it that Greek word, oxymoron? There are many British oxymora:

British plumbing

British punctuality

British sexuality

There are at least as many American oxymora:

American culture

American irony

Republican party

American English

We even share oxymora – British and American intelligence. But I don’t think that “special relationship” is oxymoronic. It’s a real concept, and not just around tax time or battle time.

Perhaps the word is trustworthy. But then I remember that both countries have helped found the professions based on mistrust, chartered surveying, chartered accountancy and chartered actuaries, just to get started. If we really want to add insult to injury, then we have to remember that while the common law legal system may be one of Britain’s finest exports, it keeps lawyers in holiday homes. The French always remarked that the sun never set on Perfidious Albion’s empire because God didn’t trust Brits in the dark. I suspect they have a similar expression for American pseudo-imperialism today.

Perhaps the word is “spiritual”. Surveys show that one of the things about America that increasingly scares Europeans is the contrast between America’s secularity in law and overt evangelism in practice. Britain too is covered with religious fervour from Orkney’s stone circles to Stonehenge to Ely Cathedral, but then I remember why God gave such a non-religious people as the English cricket – so they could develop a sense of eternity.

With a German wife I’m constantly reminded “don’t mention the war”, but perhaps that special word is “war”. Two World Wars have been fought shoulder-to-shoulder and many conflicts shared from the Falklands to the Gulf to Afghanistan to Iraq. There have also been a few confusing episodes from the War of Northern Aggression to China to Suez. Both have much to be proud of, and a few incidents to regret. We jointly celebrate the selfless efforts of our brave parents and grandparents, and, quite rightly, renew our gratitude for their sacrifices. Less remarked upon is that the British and the Americans have fought four wars against each other – the War of Independence which we’re kind of celebrating tonight, the War of 1812, and for those historians amongst you, the Northeast Aroostook War of 1838 and the Northwest Pig War of 1859. Fortunately, in two of the four wars there were no casualties, unless you count the pig.

If I couldn’t mention the war, perhaps I could advance through technology, Vorsprung durch Technik as they say in the car trade. Britain and America share quite a bit of common culture in inventiveness and science, from Bacon through to those other Gresham professors Wren and Hooke to Faraday to Edison to the Wright Brothers to Crick and Watson, or Watson and Crick as you say here. Britons respect pluckiness and doughtiness; similarly, Americans admire “can do” attitudes and Yankee ingenuity. However, having spent several years working in the UK Ministry of Defence’s research laboratories, I don’t want to get dragged again into “who invented it first” wars, though some of you may find it interesting to know that Farnborough’s Royal Aircraft Establishment was founded by the American showman, William Cody.

I wondered if national mottos might help, but there’s quite a difference between “In God We Trust” and “Honi Soit Qui Mal Y Pense”. One is fairly obvious while the other seems to say “shame upon him who thinks evil of it” – or I’ll thump ’em. I wondered about government. There are many shared government characteristics. My firm, Z/Yen Limited, is a special think tank and research organisation in the City of London where we work on a variety of topics with investment banks, technology firms and NGOs, including things such as trying to develop a Museum and Walk of Commerce & Finance for the public. Because of our involvement in the City, I had the delight of being the Chairman of the Broad Street Ward Club a couple of years ago, a traditional post since at least 1278 and part of the oldest unbroken record of democracy, the City of London’s, closely followed by Parliament itself. I think of the Magna Carta and the Constitution, but the differences are very real too. One constitution is written, one impenetrable; one country has a monarch; one country is highly decentralised. Sometimes the differences are greater than the commonality.

Yet both countries are grounded on the big theme of Independence Day, Freedom. Mark Twain, in remarks to London’s American Society 99 years ago, said:

Let us be able to say to old England, this great-hearted, venerable [nation], you gave us our Fourths of July, that we love and that we honor and revere; you gave us the Declaration of Independence, which is the charter of our rights; you, the venerable Mother of Liberties, the Champion and Protector of Anglo-Saxon Freedom – you gave us these things, and we do most honestly thank you for them.

Given Twain’s reverence, I would like to read a short section from the unanimous Declaration of the Thirteen United States of America in Congress on July 4th 1776, some 230 years ago:

When in the Course of human events, it becomes necessary for one people to dissolve the political bands which have connected them with another, and to assume among the powers of the earth, the separate and equal station to which the Laws of Nature and of Nature’s God entitle them, a decent respect to the opinions of mankind requires that they should declare the causes which impel them to the separation.

We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty and the pursuit of Happiness. That to secure these rights, Governments are instituted among Men, deriving their just powers from the consent of the governed …

OK, the Declaration of Independence loses rhetorical impact from this point on, going on to whinge quite a bit about George III’s taxation and unreasonableness, but there is an interesting point here, and that is how different cultures put different meanings into “freedom”, while some cultures share very similar meanings. This declaration was written from one people to another. Note this again, “a decent respect to the opinions of mankind requires that they should declare the causes which impel them to the separation”. It is a letter that could only be written from one people to another who shared so much in common. Peoples who believed in freedom of thought, freedom of speech, personal responsibility, habeas corpus and fair trials.

On the Continent, we have the concept of “freedom to”. In Britain and the USA, we have the concept of “freedom from”. Or expressed another way, we tend to follow the libertarian maxim of “whatever is not explicitly forbidden is permitted”, while on the Continent, and in many other countries, people tend to follow the authoritarian maxim of “whatever is not explicitly permitted is forbidden”.

Deeply held freedoms should lead not to shrill repetition that hollows out meaning; rather, freedoms should lead to tolerance. Tolerance of others, respect for their opinions, their rights and their values. Even the summer torrent of US tourists, clad in plaid, should be chatted to, their opinions respected, and then fixed.

The Instructions for American Servicemen praised British fair play and tolerance. The British are a tolerant people – and for that we love them. They are tolerant to all foreigners, having us in their land, allowing us to make fun of them and to share their sense of humour and fair play. It may not look it in this 21st century, but Americans have their own version of tolerance. Past the embarrassment of US Customs, I think most visitors to America would agree that Americans are tolerant. The problem today is that Americans are losing their reputation for tolerance due to their own actions. Sure, the British Constitution may be unwritten, but an unwritten constitution that provides freedom and tolerance is worth infinitely more than a written constitution speaking volumes but offering only lip service. America is losing its reputation for tolerance not just in geo-politics, but also in business. Calvin Coolidge, the 30th President of the USA, popularised the idea that “the business of America is business”. Extra-territorial actions on corporate activities; global impositions such as Sarbanes-Oxley; absurd anti-money-laundering regimes that ignore the data they request; or unilateral anti-dumping confusion worthy of Peter Mandelson himself – America is in danger of losing its reputation for fair play in business. More and more the business of America is seen to be regulation, in America’s favour.

Closely related to tolerance is a pragmatic view of the world. America seems to be in danger of losing its pragmatism and its tolerance. It is at just this point that I want to ask for your assistance. A special relationship is a bit like a marriage, only a spouse can tell a husband (sic) certain things. I think we need to have more constructive British criticism of America’s changing business practices. We should rebel against “taxation without representation”. We business people need to be vigilant against intolerance. Our cry for independence should be, “the only thing of which we are intolerant is intolerance itself”!

Sure, in the short-term we can all take advantage of American firms’ excessive legality, their self-centred views of the world or their callous imposition of their culture, but ultimately we will all lose. In some ways, only Britons can tell Americans when they have gone too far and insulted someone, almost always unwittingly, or gone too far, mostly through ignorance, and impeded their own objectives. We need to encourage American businesses to compete globally. As Mark Twain also remarked:

Travel is fatal to prejudice, bigotry and narrow-mindedness … Broad, wholesome, charitable views of people and things cannot be acquired by vegetating in one little corner of the earth in all one’s lifetime.

We must press the case loudly and clearly with US businesses for fair trade, sensitivity to other cultures and respect for the environment. We must also press the case for America here, where one in five young Britons dislikes America ab initio. We must deepen, strengthen and complicate our Anglo-American clan. If we, Americans abroad and the Britons of the special relationship, can get American businesses to improve their respect for other cultures, other governments, other legal systems and other taxation systems, then as Dan Quayle noticed, “the future will be better tomorrow”.

Churchill said that a fanatic is someone who won’t change his mind and won’t change the subject. I won’t change my mind, but I can close the subject.

Perhaps I was wrong to focus on Tommy Cooper and a single word – the special relationship is really founded on a mutual respect for two words – freedom and tolerance.

Or as Tommy Cooper might say, a sincere “AMEN”.

Honoured companions, it gives me great pleasure to ask you to be upstanding and drink a toast to – our Special Relationship.

Thank you.

All Aboard For Clays In Central London

Qurmudgeonly Questionnaire

Our family’s 2005 attempt to celebrate our father’s birthday led to the organisation of a Curmudgeon Conference, backed up by solid market research and pricked by a porcupine …

1) Do you consider yourself a curmudgeon? Please explain why or why not.

If you want simple answers, go ask simpletons. I must say that I do object to the motto – “Prickly Don’t Mean Ornery” – yes it does! At the end of the day, it all has to start somewhere. Besides, who’s asking? And what business is it of yours?

As for mankind’s excesses, well starting with anti-terrorism and the dot.bomb bubble, it’s going to take too long to explain why I see it as one of our prime goals on earth to puncture, preferably with a sharpened umbrella tip, all of our fellow humans’ pretensions, or fellow humans for that matter.

As another old proverb avers, “A man with one watch knows the time. A man with two watches is never sure.” I see myself as that watch. My modest task is to make curmudgeonliness the dominant body of thought on the planet. I have assumed this task with the humility expected of me.

2) Do you consider yourself a cynic? If you answer differently from #1 – why is that?

Certainly not. A reasonable Webster definition – a fault-finding captious critic; especially : one who believes that human conduct is motivated wholly by self-interest – clearly does not apply. For instance, I believe that human conduct is also motivated by stupidity, lust, misapprehensions, delusions and a small smattering of illicit substances. There is only one law, the law of unintended consequences. In the spirit of Diogenes, I would prefer to be regarded as a kynic. To quote at length from a learned article:

“Obviously the lacuna here is the absence of guidance on how to generate alternatives.” [Checkland in Open Systems Group, 1972, page 292] “Traditional scientific method, unfortunately, has never quite gotten around to saying exactly where to pick up more of these hypotheses.” [Pirsig, 1974, page 251] At a broader level, cynical reasoning comes in for much criticism directed at the promotion of change through contention. “In its cheekiness [ancient kynicism] lies a method worthy of discovery. This first really ‘dialectical materialism’, which was also an existentialism, is viewed unjustly . . . that . . . respectable thinking does not know how to deal with.” [Sloterdijk, 1988, page 101]. Overall, the difficulty is that to have a system is to have constraints, but constraints restrict change.”

The article continues much later:

“One point of view worth exploring is the “kynical”. The kynic is a person who holds that by being what you are, you achieve what you seek. To the kynic the act of analysis, a cynical task, is destructive whereas the act of existence is creative. The kynical/cynical dichotomy is an old one. While the kynic rebels against analysis, the cynic is committed to analytical approaches. One of the most famous, long-running kynical/cynical disputations was between Diogenes and Plato. The kynical view is not confined to Western philosophy, in fact it might even be said to be more Eastern than Western. The precepts of many Eastern philosophies recognise the limitations of analytical thought and the need to compensate for the destructive effects of the analytical by seeking holistic views. These holistic views, it is frequently maintained, cannot be taught. The apotheosis of this view can be seen in philosophies such as Zen, where enlightenment is sought by denying analytical thought. A holistic view is achieved by transcending the limits of analytical thought processes, e.g. by contemplating and realising the implications of a question such as “what is the sound of one hand clapping” or a paradox which forces one to go beyond verbal reasoning such as “to win is to lose” [Humphreys, 1984, page 15]. A flash of insight purportedly leads to enlightenment, as illustrated in many Zen stories where analytical techniques beloved by a student are repeatedly frustrated by his or her mentor to the point that the student achieves enlightenment. These are not solely Eastern ideas. There are echoes of kynicism among current Western philosophy, e.g. “our supreme insights must – and should! – sound like follies, in certain cases like crimes…” [Nietzsche, 1990].

The kynical view is, on its own terms, perhaps best-examined by example rather than analysis. In attempting to analyse and classify species, Plato maintained that man was a featherless bi-ped, thus distinguishing man from the birds and from mammals who walked on four legs. Diogenes, the kynic, refuted the classification with a plucked chicken…”

As I never fail to point out, “somebody else always said it first, better and more succinctly” (Somebody 1958).

3) Is the curmudgeon a reformer or a self-centered egotist?

Well naturally the curmudgeon is a reformer. In fact, the high tragedy of curmudgeonliness is that the reformer continues to work realising the futility of the task, but drawn to it by an inner nobility. A bit like Sisyphus with morals. In fact it has led to my profession – I’m a consultant; I can’t see anything I don’t want to change. A lifetime in business has taught me that people are reluctant to admit that their original choices and opinions might be in error – of that I’m sure. As I like to point out – it’s easy to confuse simple and easy – it’s simple to confuse easy and simple.

4) What has been bugging you recently as you go through your daily routine?

Why is it that you can put shaving cream safely on your toothbrush in the morning, but not safely put toothpaste on your razor? I’m also bugged by the current craze of stalkers. Originally, stalkers were praised for their ability to sneak up on game. So if the press goes on about some extreme stalker, you have to ask, “if they’re so good, why can people see them?”. I’ve got a great, beautiful stalker who’s so good I’ve never seen her – Liv Tyler. Finally, I can’t get over these annoying assertiveness training programmes. All these guys come back saying “I’m an Alpha male.” They ought to ask for their money back ’cause they’re not saying “I’m THE Alpha male.”

5) What annoys you about people in the supermarket?

I wouldn’t ride Sunday’s pig to Saturday’s market to buy the applesauce. If I can spend a euphemism – low hanging fruits is a phrase I find over-ripe in describing shoppers, store assistants, cashiers, bag boys, managers, parking lot attendants… As the Greek intellectuals pointed out, there are three stages of man, the curmudgeon, the philosopher and the sophisticate. These are exemplified by the three key statements:

- whine me?

- why me?

- wine, why not?

There are three types of people in the world – those who need help, those who don’t, and those who believe in triage. Sometimes I think that everything’s fine. Sometimes I think that the supermarket would be improved with an Uzi. Who is to say? Remember I told you so. Also, remember to forget about this.

6) What product have you bought (at any time) that you will never buy again, and what is the reason?

A piece of the Greenland icecap. Obviously for ethical reasons. Curmudgeon = WYNGER = why you never get everything required when you buy things.

7) What do you think of Christmas?

Still waiting for the Second Coming, along with the rest of us. If Christ had been Noah, cockroaches would rule the earth. Bet three of them come along at once! Christmas could get really gruesome if it turns out God is an atheist. Anyway, if you want to stop Christmas, just remove the batteries.

8) What do you think of children?

W C Fields was a Somebody [see answer to question 2]. Michael Jackson was even more of a somebody. While I recognise that “it all begins with babies” (from a conversation I had a couple of years ago on nursery demographics), I think that children take the joys of parenthood and turn them into overheads. One of the things I love about nanny-cams is that they beat them too.

How about parents? My father and mother are now over 70 and refuse to act their age. They travel around seemingly imagining they’re in their 40’s. How preposterous! They’re at least 50 years older than me!

9) Do you like living in your town? How would you describe your town? What is your assessment of the character of the people who live in your town?

Some things you can appreciate now; others you have to wait till they appreciate. Basically, can’t afford to move. London? As they say, when a man tires of London, he’s tired of looking for a parking space. According to the School of Instant Appreciation – “nothing created by man can’t be appreciated in 10 minutes.” Take it while it lasts. There was a news article saying the Greenland ice cap might entirely melt by the end of the century (couldn’t have used that headline in 1999!). This place will have a great view when it’s all built up.

With the dearth of real crime we now have a permanent morgue on our television with cop shows of all forms. I’m beginning to hope CSI find my body in front of the TV so my wife can serve her time. I intend to get a CSI “living will” card – “Don’t touch this body. Let a professional do their work.”

10) Tell us about a hypochondriac tendency that you have?

Mark Twain’s contrarian, anti-portfolio saying (from Pudd’nhead Wilson): “Behold, the fool saith, ‘Put not all thine eggs in the one basket’ – which is but a manner of saying, ‘Scatter your money and your attention’, but the wise man saith, ‘Put all your eggs in the one basket and – watch that basket.’” Naturally, that’s how I feel about my health and I would encourage you to feel likewise, or else.

There is an old Groucho Marx joke that Woody Allen recycled to explain the inevitability of amorous relationships. A guy goes to a psychiatrist: “Doctor, Doctor, my brother thinks he’s a chicken. Can you help?” “Why don’t you stop him?” “We need the eggs!”

Obviously, any profession that is incapable of running a hen coop is rather low in my pecking order. My father always said to use the right tool for the job. I just wonder why that tool was always a hammer. Besides, I have no empathy with empathy.

[End of Questionnaire – one joke short of a punchline]

It’s at times like these I get a feeling of deja nu. I’ve seen a questionnaire like this before and I’m answering it in the nude.